Why HopeSeek?

People battling complex illnesses, and their loved ones, are often desperate to find the best treatment options and healthcare facilities; even the slightest glimmer of hope is enough to keep them going. Today, with artificial intelligence in wide use, many of them turn to it for answers. However, AI responses can be inaccurate, a failure known as AI hallucination. HopeSeek is a non-profit, tech-based initiative that uses artificial intelligence with the aim of providing accurate, hallucination-free answers to health-related questions. To do so, it builds a specialized knowledge base from trustworthy sources such as medical journals, health encyclopedias, and hospital documents. In the future, we aim to offer volunteer services that help patients find and connect with top-notch healthcare facilities.

Try the prototype of HopeSeek

How it works

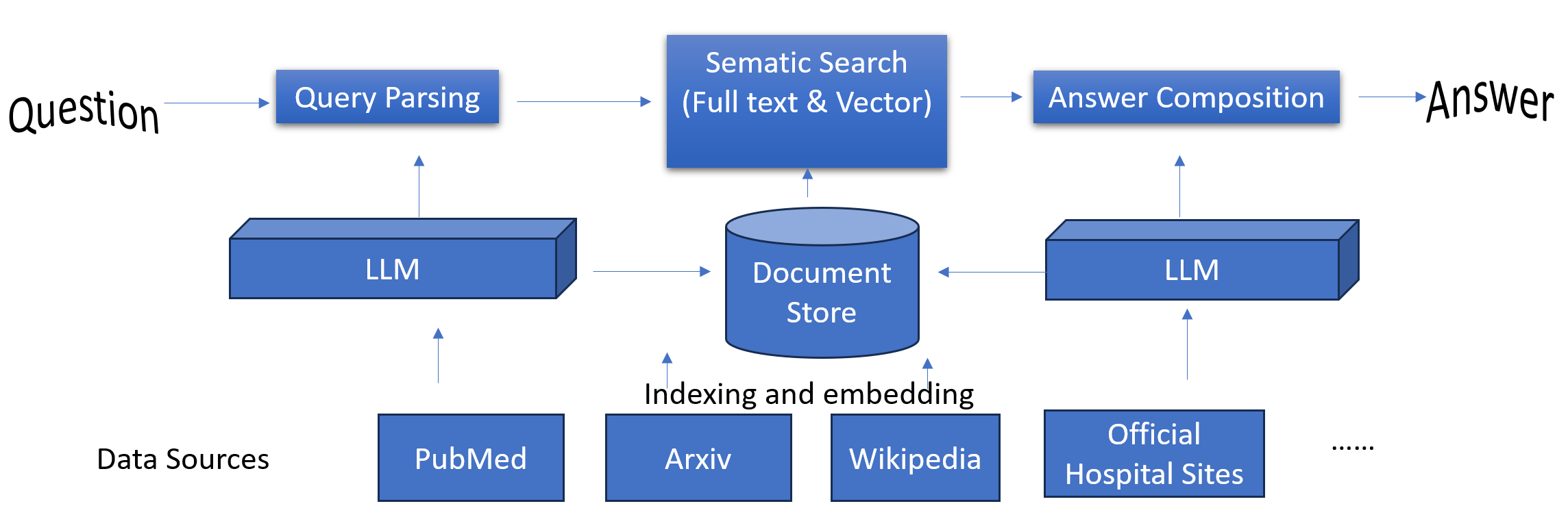

Hopeseek is a standard Retrieval-Augmented Generation(RAG) tool that uses large language models. It processes user queries, extracts key phrases, and turns them into vectors. These vectors are then matched with documents in our library to find the most semantically similar content. After ranking the search results, the large language model generates the answers. Hopeseek's document library is built from reputable sources like published papers and websites, all of which have been handpicked for their reliability. The architecture is shown as the following picture:
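
The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal, illustrative sketch, not HopeSeek's actual implementation: it assumes a toy term-frequency vector in place of a real embedding model, a linear scan with cosine similarity in place of a vector database, and it stops at building the prompt rather than calling a language model. The function names, stop-word list, prompt wording, and sample documents are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stop-word list for the toy key-phrase extraction step.
STOP_WORDS = {"a", "an", "the", "is", "are", "for", "of", "to", "and",
              "in", "what", "which", "how", "on", "with"}

def extract_key_terms(text: str) -> list[str]:
    """Lowercase, tokenize, and drop stop words (stand-in for key-phrase extraction)."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def embed(text: str) -> Counter:
    """Toy sparse 'embedding': term-frequency counts of the key terms.
    A real RAG system would call a neural embedding model here (assumption)."""
    return Counter(extract_key_terms(text))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query: str, library: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Score every document against the query; return the top_k (doc_id, score) pairs, best first."""
    q_vec = embed(query)
    scored = [(doc_id, cosine_similarity(q_vec, embed(text)))
              for doc_id, text in library.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

def build_prompt(query: str, library: dict[str, str], hits: list[tuple[str, float]]) -> str:
    """Assemble a grounded prompt that tells the LLM to answer only from the retrieved passages."""
    context = "\n".join(f"[{doc_id}] {library[doc_id]}" for doc_id, _ in hits)
    return ("Answer using only the sources below. If they do not contain the answer, say so.\n"
            f"Sources:\n{context}\n\nQuestion: {query}")

# Hypothetical sample library and query.
library = {
    "doc1": "Immunotherapy is a treatment option for some lung cancer patients.",
    "doc2": "Regular exercise improves cardiovascular health.",
    "doc3": "Clinical trials for lung cancer compare new treatment options.",
}
query = "What treatment options exist for lung cancer?"
hits = retrieve(query, library, top_k=2)
print([doc_id for doc_id, _ in hits])  # ['doc3', 'doc1']
prompt = build_prompt(query, library, hits)
print(prompt)  # this string would be sent to the LLM
```

In a production system, `embed` would be replaced by a neural embedding model, so that semantically related wording (for example "option" and "options") still matches, and the linear scan would be replaced by a vector index. The instruction in the prompt to answer only from the listed sources is what ties the model's output back to the curated library.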

About AI Hallucination

AI hallucination occurs when an artificial intelligence model produces false or misleading output that reflects patterns in its training rather than actual facts. It can affect many kinds of AI applications, including natural language processing, image generation, and text prediction. Hallucinations often stem from limitations in the training data, biases present in that data, or the model's failure to distinguish correlation from causation.